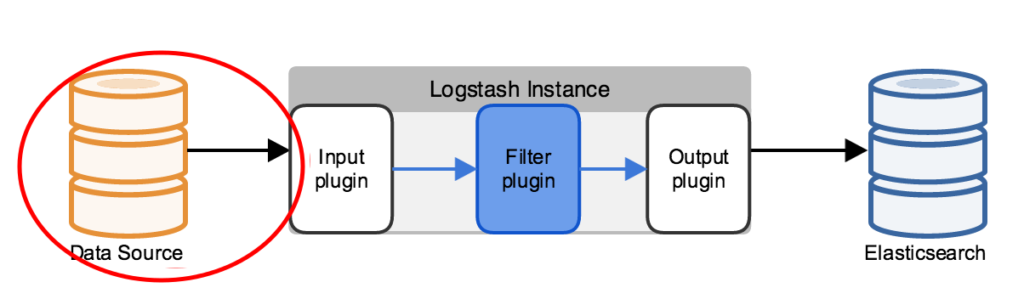

Anyone using ELK for logging should be raising an eyebrow right now. How can these two tools even be compared to start with? Yes, both Filebeat and Logstash can be used to send logs from a file-based data source to a supported output destination. In most cases, though, we will be using both in tandem when building a logging pipeline with the ELK Stack, because each has a different function. So, why the comparison? Well, people are still getting confused by the differences between the two log shippers.

With the introduction of Beats, and the growth in both their popularity and their number of use cases, people are asking whether the two are complementary or mutually exclusive. The new Filebeat modules can handle processing and parsing on their own, clouding the issue even further. This post will attempt to shed some light on what makes these two tools both alternatives to each other and complementary at the same time, by explaining how the two were born and providing some simple examples.

Logstash was originally developed by Jordan Sissel to handle the streaming of large amounts of log data from multiple sources. After Sissel joined the Elastic team (then called Elasticsearch), Logstash evolved from a standalone tool into an integral part of the ELK Stack (Elasticsearch, Logstash, Kibana).

To deploy an effective centralized logging system, you need a tool that can both pull data from multiple data sources and give meaning to it. This is the role Logstash plays: it pulls and receives data from multiple systems, transforms it into a meaningful set of fields, and eventually streams the output to a defined destination for storage (stashing).

Well, there was, and still is, one outstanding issue with Logstash: performance. Logstash requires a JVM to run, and this dependency, coupled with its implementation in Ruby, became the root cause of significant memory consumption, especially when multiple pipelines and advanced filtering are involved. This pain point became the catalyst for change.

#And Along Came Lumberjack (and Later, Logstash-Forwarder)#

Lumberjack was initially developed as an experiment for outsourcing the task of data extraction, and was meant to be used as a lightweight shipper that collects logs before sending them off for processing on another platform (such as Logstash). Written in Go, Lumberjack was built around a network protocol designed to handle large bulks of data more efficiently, keep a low memory footprint, and support encryption.

A dramatic turn of events led to Lumberjack being renamed Logstash-Forwarder, with the former name now referring only to the network protocol and the latter to the actual log-shipping program.

There's nothing like a logging lyric to clarify things, hey?

♫ I'm a lumberjack and I'm ok! I sleep when idle, then I ship logs all day! I parse your logs, I eat the JVM agent for lunch! ♫

(source based on this Monty Python skit)

#LOGSTASH INGEST FILEBEAT OUTPUT DOWNLOAD#

Download and add the following files to the Logstash configuration's conf.d directory (e.g.

Modify the input and output sections of nfo.

#LOGSTASH INGEST FILEBEAT OUTPUT INSTALL#

Installation Steps

Install the following components:

Logstash has a larger footprint, but it enables you to filter and transform data, adding calculated fields at index time if necessary.
